Command Palette

Search for a command to run...

FirstAidQA: A Synthetic Dataset for First Aid and Emergency Response in Low-Connectivity Settings

FirstAidQA: A Synthetic Dataset for First Aid and Emergency Response in Low-Connectivity Settings

Saiyma Sittul Muna Rezwan Islam Salvi Mushfiqur Rahman Mushfique Ajwad Abrar

Abstract

In emergency situations, every second counts. The deployment of Large Language Models (LLMs) in time-sensitive, low or zero-connectivity environments remains limited. Current models are computationally intensive and unsuitable for low-tier devices often used by first responders or civilians. A major barrier to developing lightweight, domain-specific solutions is the lack of high-quality datasets tailored to first aid and emergency response. To address this gap, we introduce FirstAidQA, a synthetic dataset containing 5,500 high-quality question answer pairs that encompass a wide range of first aid and emergency response scenarios. The dataset was generated using a Large Language Model, ChatGPT-4o-mini, with prompt-based in-context learning, using texts from the Vital First Aid Book (2019). We applied preprocessing steps such as text cleaning, contextual chunking, and filtering, followed by human validation to ensure accuracy, safety, and practical relevance of the QA pairs. FirstAidQA is designed to support instruction-tuning and fine-tuning of LLMs and Small Language Models (SLMs), enabling faster, more reliable, and offline-capable systems for emergency settings. We publicly release the dataset to advance research on safety-critical and resource-constrained AI applications in first aid and emergency response. The dataset is available on Hugging Face at https://huggingface.co/datasets/i-am-mushfiq/FirstAidQA.

One-sentence Summary

Muna et al. from Islamic University of Technology introduce FirstAidQA, a synthetic dataset of 5,500 high-quality first aid question-answer pairs generated via ChatGPT-4o-mini using prompt-based in-context learning and human validation, addressing the scarcity of domain-specific emergency response data to train lightweight LLMs and SLMs for offline-capable systems in time-sensitive, low-connectivity scenarios.

Key Contributions

- Identifies the critical absence of domain-specific datasets for first aid as a barrier to deploying lightweight AI in low-connectivity emergency scenarios and introduces FirstAidQA, a synthetic dataset of 5,500 question-answer pairs generated via ChatGPT-4o-mini using in-context learning from the Vital First Aid Book with rigorous preprocessing and human validation.

- Validates dataset safety and accuracy through expert evaluation of 200 randomly sampled pairs by three medical professionals, assessing criteria including safety completeness and relevance while documenting flagged examples for cautious handling as evidenced in the provided evaluation tables.

- Enables offline-capable emergency response systems by structuring FirstAidQA specifically for fine-tuning small language models, building on methodologies proven effective in prior resource-constrained medical applications like Cahlen's offline first-aid systems.

Introduction

The authors address a critical gap in emergency response tools for low-connectivity regions where immediate, accurate first-aid guidance can save lives but internet access is unreliable. Prior solutions like FAQ-based chatbots or commercial voice assistants often omit evidence-based steps or provide incomplete instructions, while existing medical QA datasets focus on clinical records or general health information—not actionable, step-by-step first aid for laypeople. Synthetic datasets like Self-Instruct or Offline Practical Skills QA demonstrate LLMs' potential for scalable data generation but lack first-aid specificity. The authors' main contribution is FirstAidQA, a purpose-built synthetic dataset generated to deliver reliable, guideline-compliant first-aid instructions offline, overcoming the absence of dedicated resources for this high-stakes domain.

Dataset

- The authors introduce FirstAidQA, a synthetic dataset comprising 5,500 question-answer pairs focused on first aid and emergency response scenarios. It is generated using ChatGPT-4o-mini via prompt-based in-context learning, with source material exclusively drawn from the certified Vital First Aid Book (2019).

- Key category details include:

- Total size: 5,500 QA pairs spanning 15 emergency categories (e.g., CPR, burns, fractures, head injuries, bleeding management).

- Source: Text chunks from the Vital First Aid Book, manually segmented to preserve context (e.g., casualty movement protocols or burn treatment steps).

- Filtering: Irrelevant theoretical content was excluded; only text applicable to real-world emergencies was retained for QA generation.

- Safety rules: Prompts explicitly constrained the LLM to generate answers strictly from provided context chunks, with diversified topic sampling to reduce bias.

- The dataset supports instruction-tuning and fine-tuning of lightweight LLMs/SLMs for offline deployment in low-connectivity environments. The authors use the full dataset (without specified train/validation splits) to train models requiring rapid, reliable emergency guidance, emphasizing practical procedural knowledge over clinical diagnostics.

- Processing includes contextual chunking of source text, structured JSON-formatted output generation (20 QA pairs per prompt batch), human validation for accuracy/safety, and iterative refinement to ensure diversity (e.g., adding pediatric/elderly scenarios). No cropping strategy is applied; instead, context-preserving chunks maintain situational relevance for edge-device deployment.

Experiment

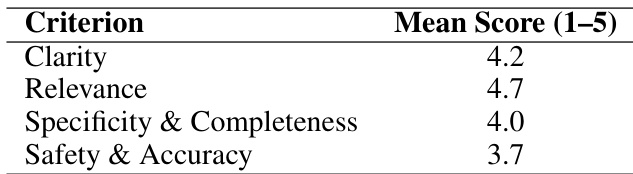

- Expert evaluation of 200 randomly sampled QA pairs by three medical professionals validated clarity, relevance, specificity and completeness, and safety and accuracy, with mean ratings documented in Table 2

- Tables 3 and 4 highlight specific QA pairs containing potentially unsafe instructions that require cautious handling during dataset utilization

The authors use expert evaluation to assess 200 QA pairs across four criteria, with scores averaged across three medical professionals. Results show the highest mean score for Relevance (4.7) and the lowest for Safety & Accuracy (3.7), indicating that while questions are well-targeted and clear, some answers may contain medically inaccurate or unsafe content.